In this blog post, I will be talking about Klov by ExtentReports. Klov is an extension service for ExtentReports that adds historical reporting and trend monitoring on top of everything ExtentReports already provides, such as reporting, logging, and taking screenshots when a test fails, passes or is skipped. The GitHub link for the latest version of Klov Server is:

https://github.com/extent-framework/klov-server

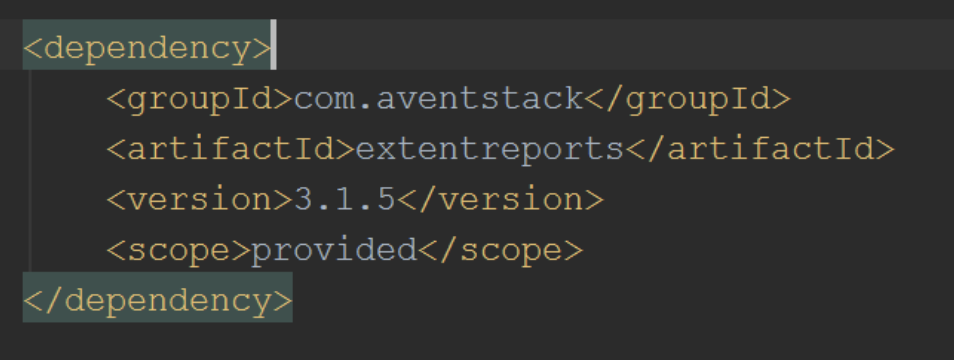

Unlike ExtentReports, Klov requires MongoDB to be installed and running on an available port in order to store and access historical data. Optionally, Redis can be used alongside it as an additional in-memory database. The only other requirement aside from MongoDB is, of course, the ExtentReports core library, which can be added to your project as a Maven dependency.

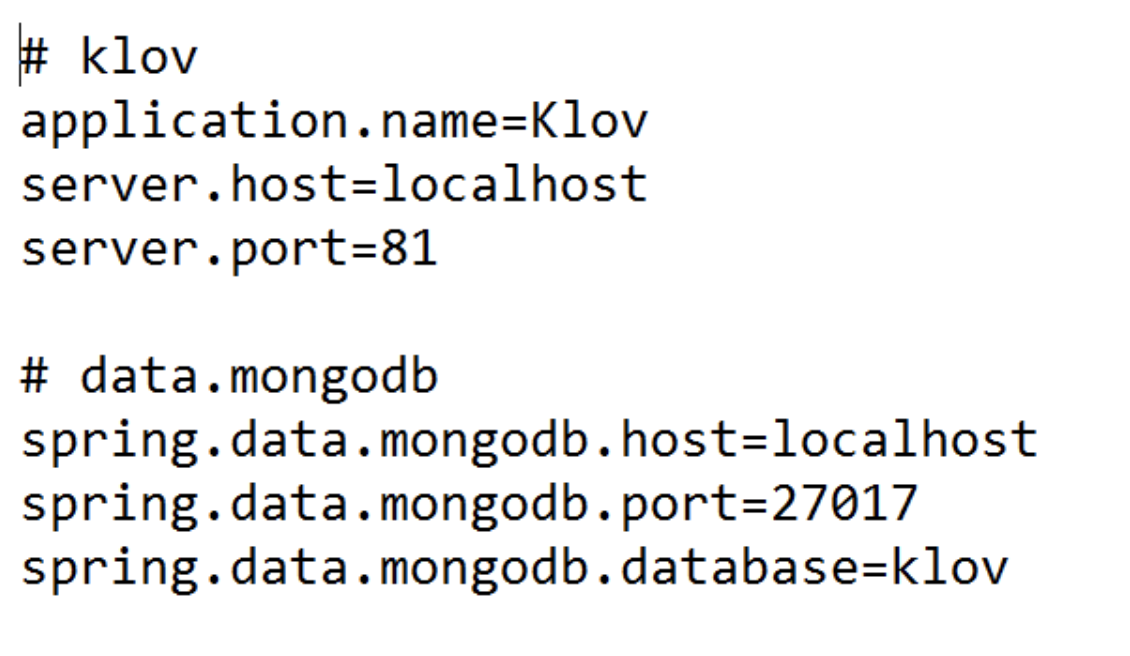

After installing MongoDB and starting its daemon on an available port with the “mongod” command, the MongoDB settings for Klov should be configured to match the host and the port that the daemon is running on. This configuration, as well as the settings for Klov itself, Redis and the schedulers, is done in the “application.properties” file, which is in the klov-server folder.
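Before starting Klov, it can help to confirm that the host and port in “application.properties” really point at a running daemon. The following is only a rough sketch: the class `MongoPortCheck` and its methods are hypothetical helpers, and the keys `spring.data.mongodb.host` and `spring.data.mongodb.port` are the standard Spring Boot names, which I am assuming Klov uses, so check them against your own file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.Properties;

// Hypothetical helper: reads the MongoDB host/port from properties
// and checks whether something is listening there.
class MongoPortCheck {

    // Assumed key name (standard Spring Boot); falls back to localhost.
    public static String mongoHost(Properties props) {
        return props.getProperty("spring.data.mongodb.host", "localhost");
    }

    // Assumed key name (standard Spring Boot); falls back to MongoDB's default port.
    public static int mongoPort(Properties props) {
        return Integer.parseInt(props.getProperty("spring.data.mongodb.port", "27017"));
    }

    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    public static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        // Inline sample standing in for the real application.properties file.
        Properties props = new Properties();
        props.load(new StringReader(
                "spring.data.mongodb.host=localhost\n"
              + "spring.data.mongodb.port=27017\n"));

        String host = mongoHost(props);
        int port = mongoPort(props);
        System.out.println("mongod reachable at " + host + ":" + port + "? "
                + isReachable(host, port, 2000));
    }
}
```

A successful TCP connection only tells you that something is listening on that port, not that it is MongoDB, but it catches the most common mistake of a mismatched port.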

We can now start Klov Server by running the jar file provided in the Klov folder with

java -jar klov-x.x.x.jar

and log in with the admin credentials: klovadmin/password.

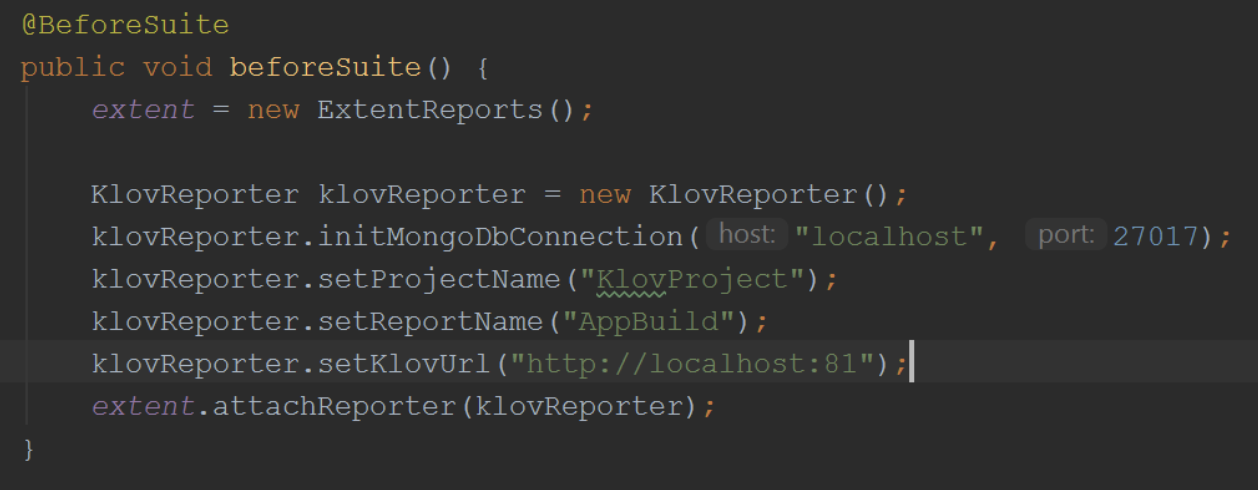

Since we haven’t added or run any projects on the server, there won’t be any project data to view; however, you will still be able to edit your user information. In order to link your project to the Klov Server, you should initialize ExtentReports and KlovReporter before your test script, set the required MongoDB and Klov connections and attach KlovReporter to ExtentReports, as shown below:

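Putting these initializations in a method annotated with TestNG's @BeforeSuite, and the flush command in one annotated with @AfterSuite, in your test base class is very useful: the reporter is set up once before any test runs, and the results are written once after the last one finishes.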
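For readers who prefer text to screenshots, here is a minimal sketch of such a base class. It assumes the ExtentReports 3.x API, where the reporter class is `com.aventstack.extentreports.reporter.KlovReporter`, plus TestNG on the classpath. The class name `KlovTestBase`, the project name, the report name and the URLs are placeholders of mine, so replace them with the values from your own setup.

```java
import com.aventstack.extentreports.ExtentReports;
import com.aventstack.extentreports.reporter.KlovReporter;
import org.testng.annotations.AfterSuite;
import org.testng.annotations.BeforeSuite;

// Hypothetical base class; test classes extend it to share one report.
public abstract class KlovTestBase {

    // Static because @BeforeSuite runs once for the whole suite,
    // while TestNG creates a separate instance per test class.
    protected static ExtentReports extent;

    @BeforeSuite
    public void setUpReporting() {
        KlovReporter klov = new KlovReporter();

        // Host and port of the running mongod (placeholder values).
        klov.initMongoDbConnection("localhost", 27017);

        // Project and build names shown in the Klov UI (placeholder values).
        klov.setProjectName("klov-demo");
        klov.setReportName("build-1");

        // Address where Klov Server is served (placeholder value).
        klov.setKlovUrl("http://localhost");

        extent = new ExtentReports();
        extent.attachReporter(klov);
    }

    @AfterSuite
    public void tearDownReporting() {
        // Writes the collected test results to the attached reporter.
        extent.flush();
    }
}
```

A test class extending this base can then call `extent.createTest("some test name")` and log to the returned test object with methods such as `pass(...)` and `fail(...)`. Giving each run a different report name is what makes the runs show up as separate builds.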

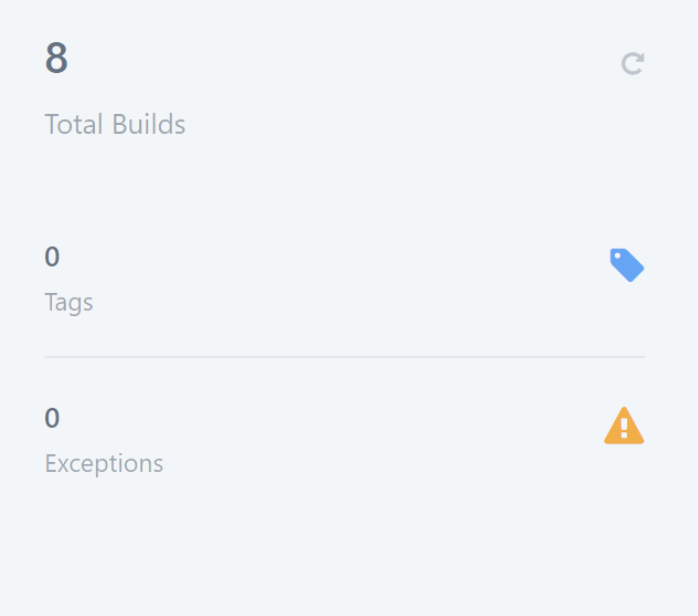

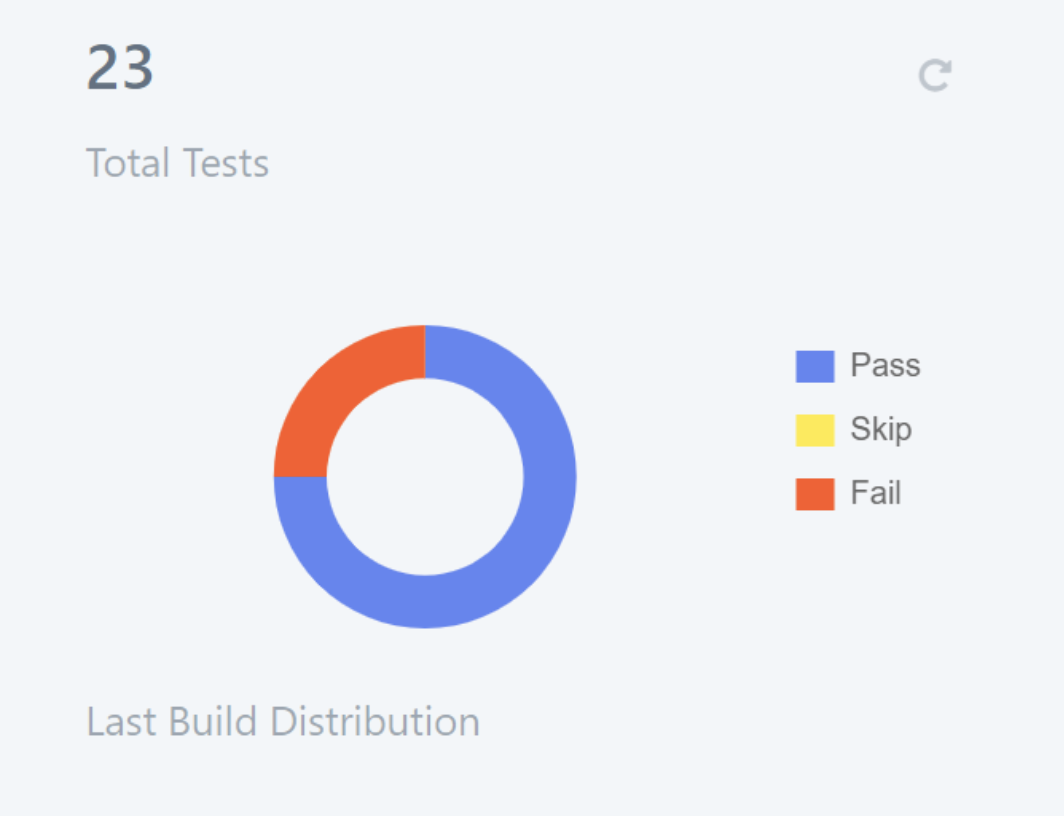

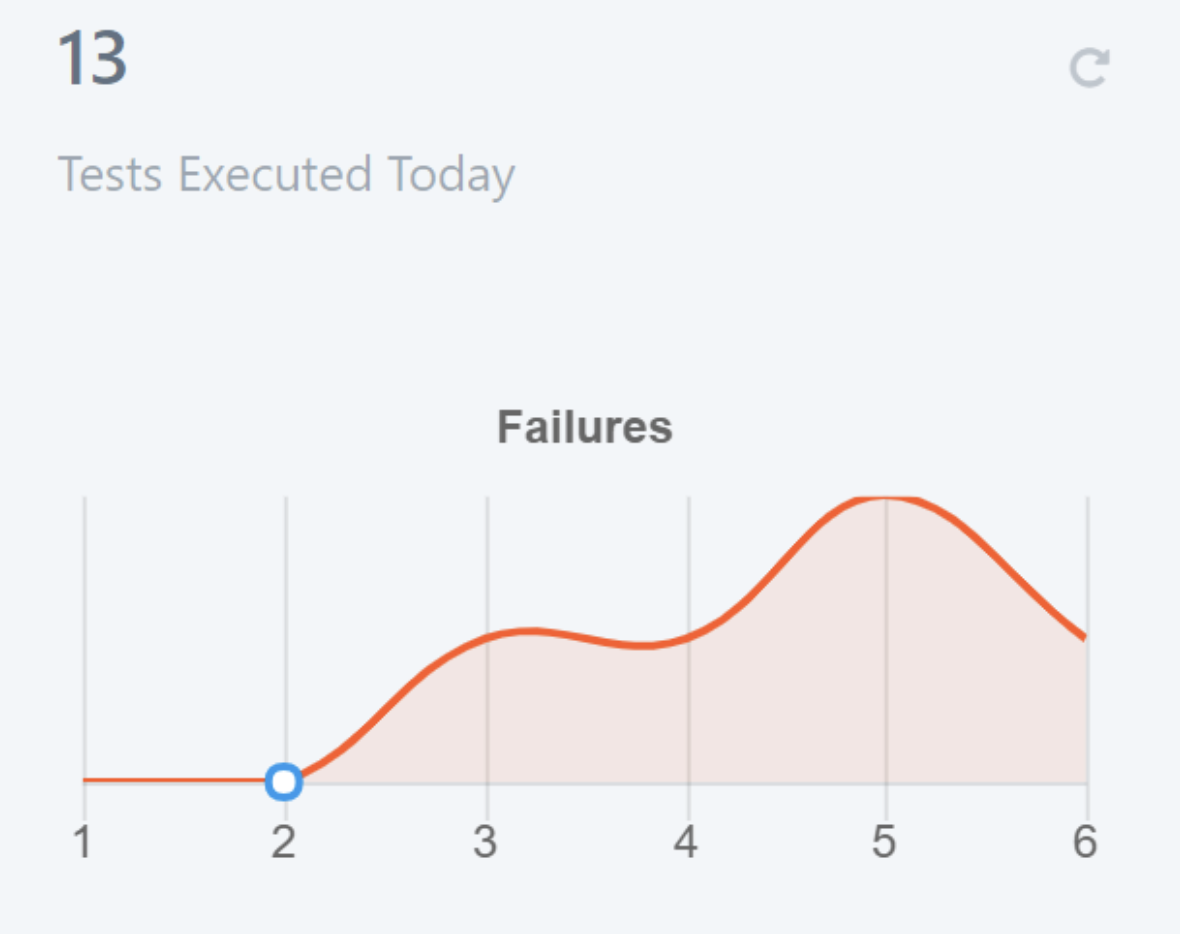

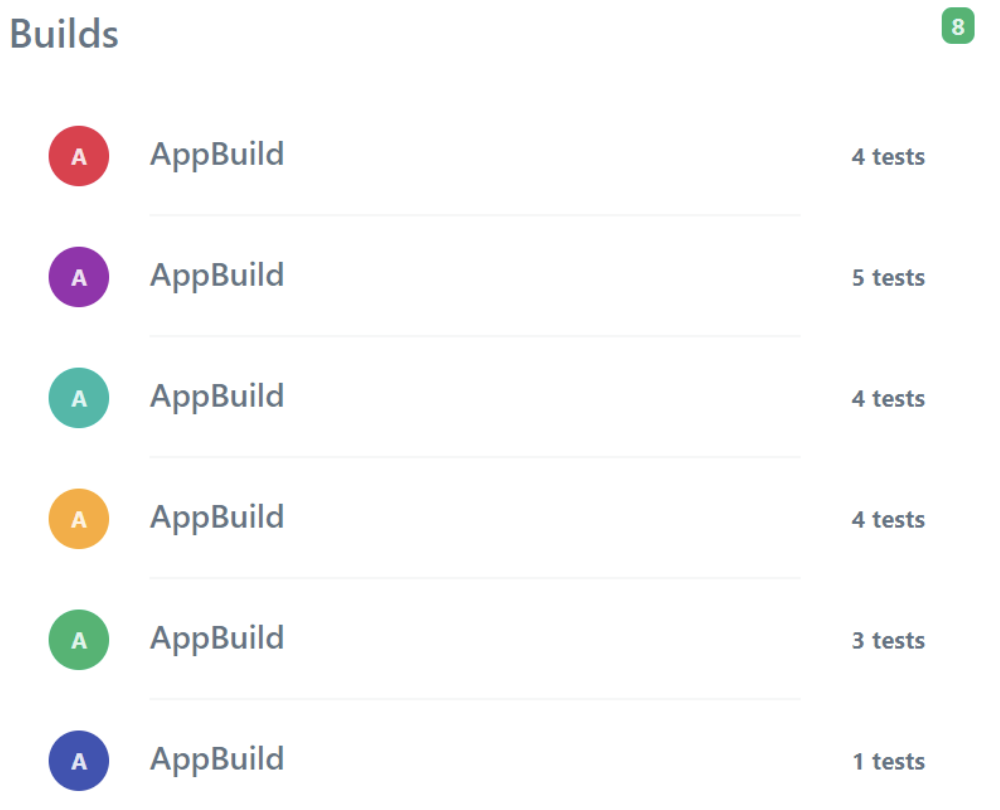

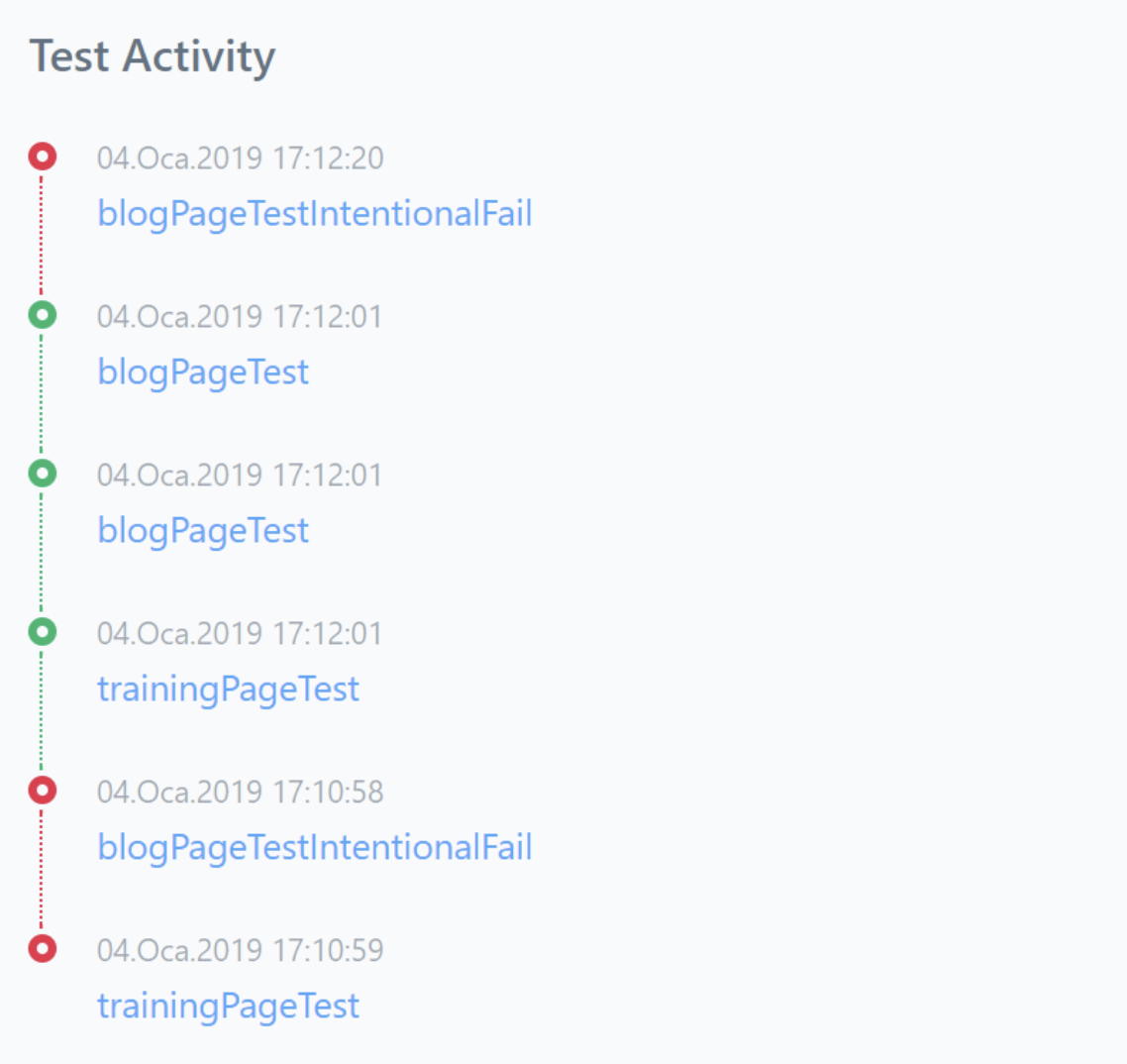

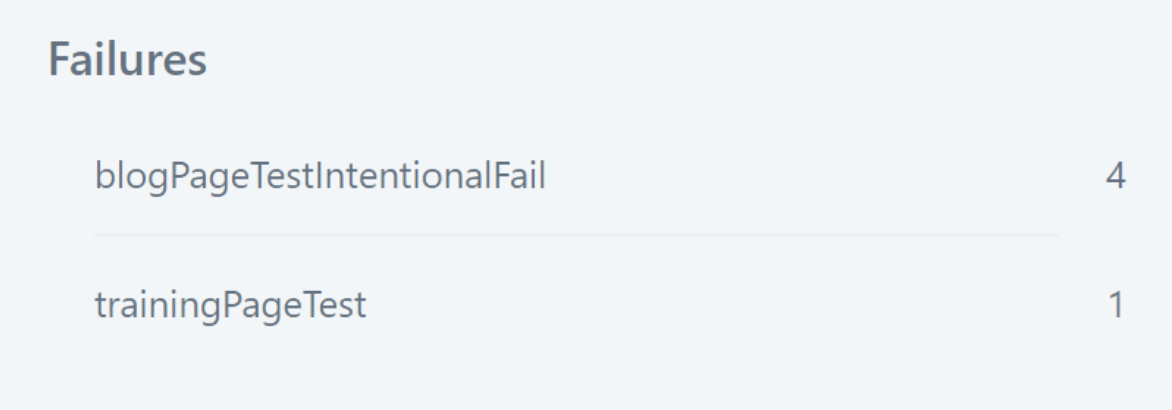

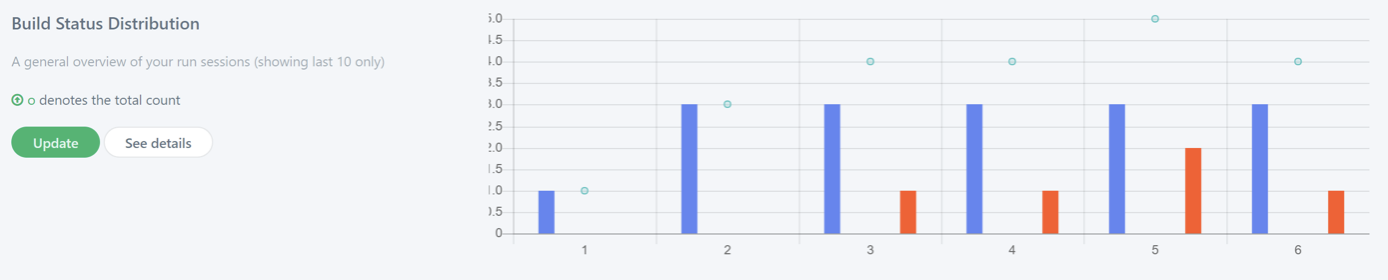

I created a test suite and, for each build, put a different number of simple passing and failing tests in it in order to get different results. After running the suite 6 times, I was able to see the results of each build on the “Dashboard” page, which I found aesthetically pleasing and very informative. The page consists of 7 different sections, shown below:

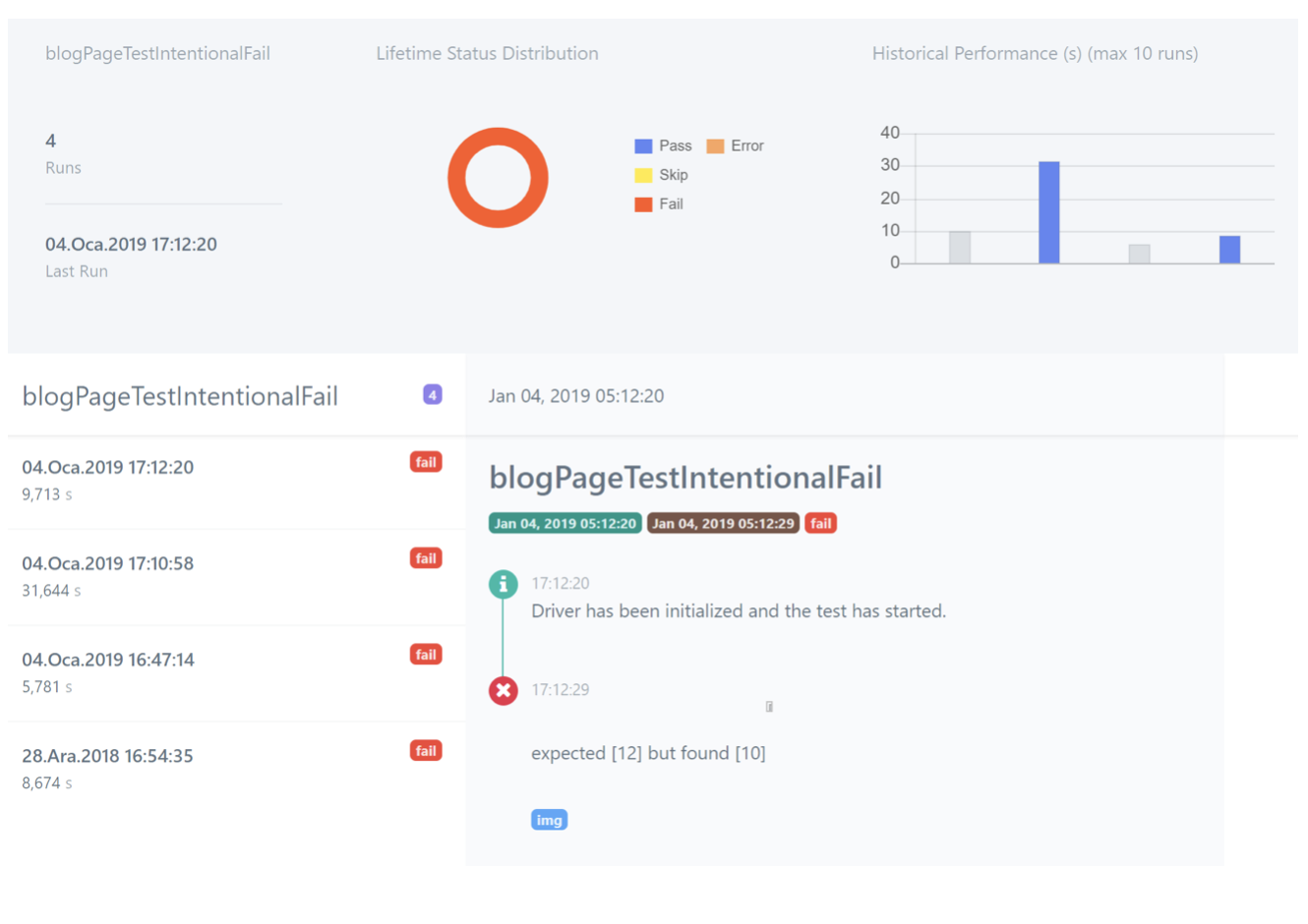

As you can see, all of these reports are simple, informative and easy on the eyes. The logs can also be viewed by selecting an individual test either from “Test Activity” or from the “Builds” section. Here are the results for “blogPageTestIntentionalFail”:

In order to view builds, their pass/fail percentages and their test details, you can select the “Builds” tab from the list on the left:

If your development or test team values historical documentation and trend monitoring, you have to give Klov a shot.

Samet Nehir Gökçe

Software Development Engineer in Test

![[REVIEW] First Look at Klov Reporter](https://keytorc.com/wp-content/uploads/2019/01/1266x550px_lance-anderson-QdAAasrZhdk-unsplash.jpg)